When a security incident slips through defenses, the first reaction is almost always the same: “Why didn’t the tool catch this?”

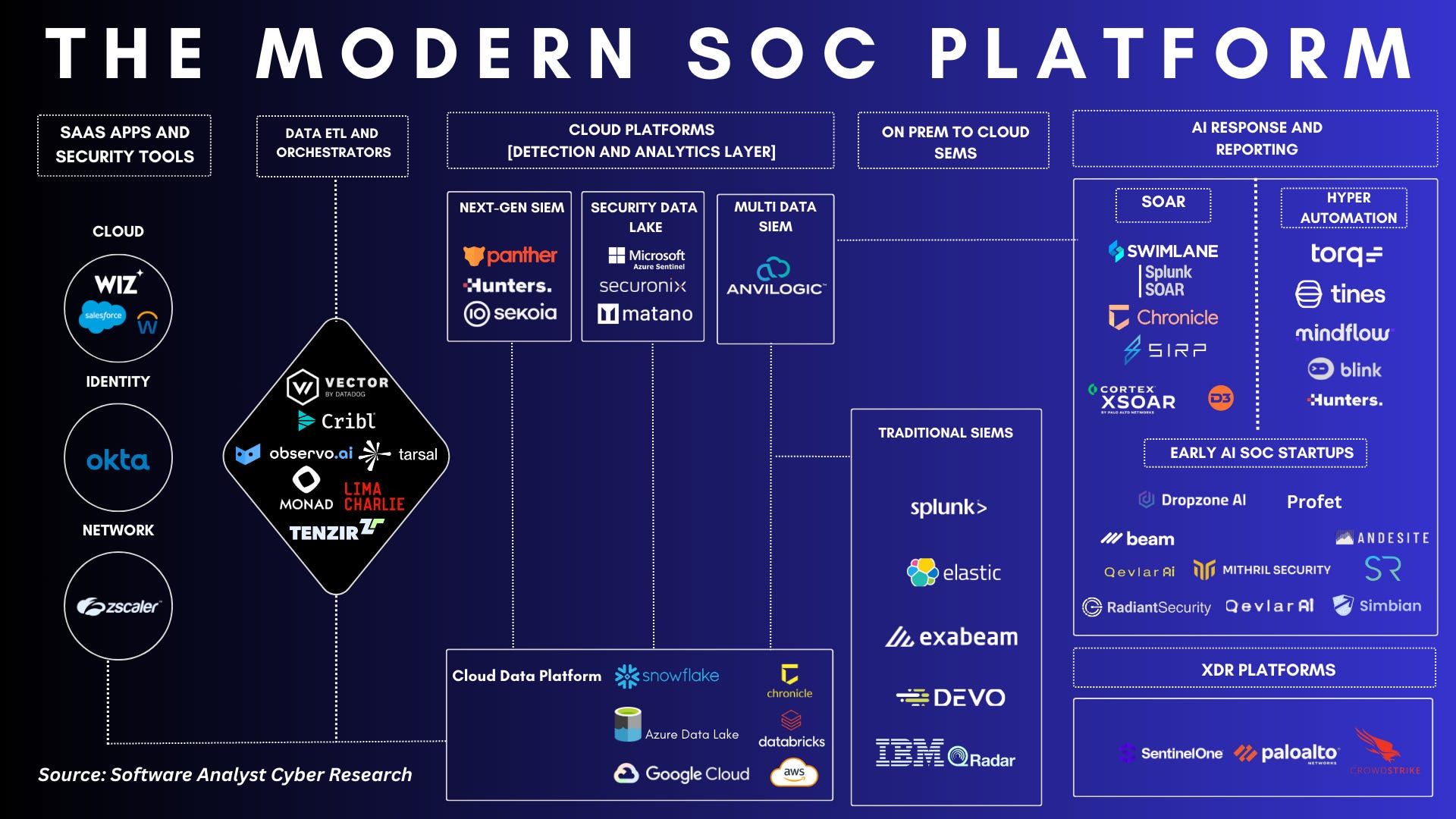

SIEMs, EDRs, XDRs, SOAR platforms — the list of tools grows every year, yet breaches still happen. This has led many teams to believe that security tools are failing.

In reality, most tools are doing exactly what they were designed to do. The real failure lies in how much we expect from them.

Tools Are Built for Detection — Not Understanding

Security tools are excellent at processing data at scale. They collect logs, correlate events, and raise alerts based on predefined logic. What they do not possess is situational awareness. They do not understand business context, intent, or subtle behavioral shifts unless someone explicitly teaches them what to look for.

Yet many organizations deploy tools with the expectation that detection will be automatic and complete. Alerts are treated as answers rather than signals. When nothing fires, teams assume nothing is wrong. This mindset turns detection into a passive exercise instead of an active one.
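To make "predefined logic" concrete, here is a minimal sketch of a threshold rule of the kind many tools ship with. The event fields, the threshold value, and the function name are assumptions made up for illustration, not any product's real schema. The point is that the rule only reports what it was written to look for.

```python
from collections import Counter

# Hypothetical, simplified authentication events.
events = [
    {"user": "alice", "outcome": "failure"},
    {"user": "alice", "outcome": "failure"},
    {"user": "alice", "outcome": "failure"},
    {"user": "bob", "outcome": "success"},  # could be stolen but valid credentials
]

FAILED_LOGIN_THRESHOLD = 3  # assumed value, for illustration only


def brute_force_rule(events, threshold=FAILED_LOGIN_THRESHOLD):
    """Return users whose failed-login count reaches the threshold."""
    failures = Counter(e["user"] for e in events if e["outcome"] == "failure")
    return sorted(user for user, count in failures.items() if count >= threshold)


alerts = brute_force_rule(events)
print(alerts)  # ['alice']
```

Nothing fires for `bob`, and that silence says only that no failed-login threshold was crossed. It says nothing about whether the successful login was legitimate.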

Modern Attacks Don’t Break Rules — They Follow Them

One of the most common misconceptions is that attacks must violate something obvious. In modern environments, many attacks operate entirely within allowed behavior. Valid credentials are used. Legitimate APIs are called. Trusted SaaS platforms are abused.

From a tool’s perspective, everything looks normal. The authentication succeeded. The request was authorized. The traffic came from a known provider. The absence of alerts is not a failure — it is the expected outcome based on how the tools were configured.

When defenders expect tools to “just know” what is wrong without context, they misunderstand the nature of detection.
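One way to picture what "context" adds is to compare an event that passed every control against what is normal for that account. The sketch below is a simplified illustration: the `Baseline` fields, the event keys, and the API names are hypothetical, and a real baseline would have to be built and maintained by people who know the business.

```python
from dataclasses import dataclass, field


@dataclass
class Baseline:
    """What 'normal' looks like for one account (hypothetical fields)."""
    countries: set = field(default_factory=set)
    apis: set = field(default_factory=set)


def deviations(event, baseline):
    """List the ways a successful, authorized event departs from the baseline."""
    found = []
    if event["country"] not in baseline.countries:
        found.append("new country")
    if event["api"] not in baseline.apis:
        found.append("new API")
    return found


# Assumed history: this account normally reads files from Germany.
baseline = Baseline(countries={"DE"}, apis={"files.read"})

# The authentication succeeded and the request was authorized.
event = {"user": "bob", "auth": "success", "country": "BR", "api": "files.export_all"}

print(deviations(event, baseline))  # ['new country', 'new API']
```

The event breaks no rule, yet it departs from the baseline in two ways. Whether that matters is still a judgment call: the check surfaces a question for an analyst, it does not answer it.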

More Tools Often Mean More Blind Spots

Ironically, adding more tools often increases confusion rather than clarity. Each platform covers a narrow slice of the environment. Each generates its own alerts, metrics, and dashboards. Without a clear detection strategy, teams end up managing tools instead of threats.

This fragmentation creates false confidence. Visibility feels high because screens are full, but understanding remains shallow. Important signals get lost between products, and responsibility becomes blurred. When something is missed, the assumption is that the tool failed — not that expectations were unrealistic.
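As a rough illustration of signals getting lost between products, the sketch below groups low-severity signals by user across tools. The source names, signal text, and the `min_sources` cutoff are all assumptions; real correlation would also need time windows and identity mapping between products.

```python
from collections import defaultdict

# Hypothetical low-severity signals, each from a different product.
signals = [
    {"source": "idp", "user": "bob", "signal": "login from new device"},
    {"source": "edr", "user": "bob", "signal": "unusual archive tool run"},
    {"source": "saas", "user": "bob", "signal": "bulk file download"},
    {"source": "edr", "user": "alice", "signal": "unusual archive tool run"},
]


def correlate_by_user(signals, min_sources=2):
    """Return users flagged by at least `min_sources` distinct products."""
    sources = defaultdict(set)
    for s in signals:
        sources[s["user"]].add(s["source"])
    return {
        user: sorted(srcs)
        for user, srcs in sources.items()
        if len(srcs) >= min_sources
    }


print(correlate_by_user(signals))  # {'bob': ['edr', 'idp', 'saas']}
```

Viewed in each product's own dashboard, every one of these signals is easy to dismiss. Only when they are joined on the same user does a pattern worth investigating appear, and someone has to decide that the join is worth making.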

Detection Is a Human Responsibility

Security tools do not replace thinking. They amplify it. Effective detection requires people who understand normal behavior, business workflows, and attacker tradecraft. Tools provide raw material; humans provide interpretation.

In many post-incident reviews, the data needed to detect the attack was already available. Logs existed. Events were recorded. The issue was not a lack of tooling but a lack of deliberate, question-driven analysis: no one was actively asking whether what they were seeing made sense.

Expecting tools to compensate for missing context is a convenient illusion.